Hex Tactics: AI Extension

Proudly Presenting: Hex Tactics

Overview

This devlog is about Hex Tactics, a turn-based mech tactics game developed over 5 weeks in four distinct phases. The last post covered the first phase, which was primarily dedicated to rapid prototyping of core mechanics. In this post, we’re covering the second phase, which focused on extending the AI of the computer player.

- Rapid Prototype [14 Days]

- > AI Extension < [6 Days]

- Polish Phase [6 Days]

- Procgen Iteration [10 Days]

Beyond serving as an exercise in rapid development, one of my key motivations for the project was practicing techniques in Artificial Intelligence (AI). The first phase of development had produced a reasonable opponent driven by a decision tree. At this stage, I was interested in exploring a utility-based system for deciding which actions the computer player’s units would take. For such a system to offer much benefit over the existing implementation, the game’s mechanics would need to be extended a bit as well. The result would be a new difficulty mode with a more challenging AI opponent which, with the help of some extra polish, would come across as both more intelligent and more intelligible to the player.

Take Cover!

Defense Bonuses

The first day of this phase was spent introducing terrain-based defense bonuses. These would add extra depth not only to the player’s own strategies but also to those of the AI opponent. An attack would now deal 2 points of damage by default. However, if the targeted unit stood on a partially forested hex tile, the damage would be reduced to just 1 point.

Terrain types like "Grassy Sand with Palms" reduce damage received by 50%

Tying defense bonuses to the terrain meant that each hex tile now had an inherent strategic value. Even if a unit wasn’t currently on a tile with a defense bonus, there would still be some value in being near one or more tiles that did, since the unit might reach one on its next turn. Hence, if the AI was constructed to prefer positions on defensive tiles and otherwise seek greater proximity to them, it could make for a much more interesting opponent with the illusion of long-term planning.

This situation was ripe for what’s known as an “influence map”, in which each hex of the map is assigned a score representing its strategic value. In this case, we want to calculate a score based on the given hex’s distance to the nearest defense bonus. Since movement costs differ per terrain type and we want to consider multiple potential destinations, we can use a variation of Dijkstra’s algorithm.

Red Blob Games has an excellent introductory article on pathfinding systems, including an explanation of Dijkstra's algorithm

As mentioned in the previous post, I had used a traditional form of Dijkstra’s algorithm to obtain movement range. In that use case, the function starts at the mech’s current position and expands outward while keeping track of the weighted distance from the origin. For this use case, we can do the reverse: iterating through each of the nearby hexes with a defense bonus, we conduct expansions starting from these potential destinations. Each expansion only proceeds while the distance from the current destination is less than the best distance found by earlier iterations of the loop for other destinations. Thus, with judicious use of early exit conditions, this approach can be much more efficient than conducting multiple independent passes over the same hex ranges.
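To make the reverse expansion more concrete, here is a minimal Python sketch. It is not the project’s Unity code, and it rests on a few assumptions. Hexes use cube coordinates. A hypothetical `enter_cost` dictionary gives the cost of moving into each walkable hex. A single `best` table is shared across all destinations, so each later expansion stops wherever it can’t beat an earlier one.

```python
import heapq
import math
from typing import Dict, Iterable, Iterator, Tuple

# Cube coordinate (q, r, s) with q + r + s == 0.
Cube = Tuple[int, int, int]

CUBE_DIRECTIONS = [(1, -1, 0), (1, 0, -1), (0, 1, -1),
                   (-1, 1, 0), (-1, 0, 1), (0, -1, 1)]


def cube_neighbors(cube: Cube) -> Iterator[Cube]:
    """Yield the six hexes adjacent to `cube`."""
    q, r, s = cube
    for dq, dr, ds in CUBE_DIRECTIONS:
        yield (q + dq, r + dr, s + ds)


def defense_distance_map(enter_cost: Dict[Cube, int],
                         defense_hexes: Iterable[Cube]) -> Dict[Cube, float]:
    """Weighted distance from each reachable hex to its nearest defense hex.

    enter_cost (hypothetical) maps every walkable hex to the cost of moving
    into it; hexes missing from the dict are treated as impassable.
    """
    best: Dict[Cube, float] = {}
    for destination in defense_hexes:
        if destination not in enter_cost or best.get(destination, math.inf) <= 0:
            continue  # impassable or duplicate destination
        best[destination] = 0
        frontier = [(0, destination)]
        while frontier:
            dist, current = heapq.heappop(frontier)
            if dist > best[current]:
                continue  # stale heap entry; a shorter route was found later
            for neighbor in cube_neighbors(current):
                if neighbor not in enter_cost:
                    continue
                # Expanding in reverse: a unit on `neighbor` walks into `current`.
                candidate = dist + enter_cost[current]
                # Early exit: only keep expanding where this destination beats
                # every destination processed so far.
                if candidate < best.get(neighbor, math.inf):
                    best[neighbor] = candidate
                    heapq.heappush(frontier, (candidate, neighbor))
    return best
```

Because `best` only ever decreases and every improvement is re-expanded, the result matches running a separate search from every defense hex and keeping the minimum, while skipping most of the repeated work.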

There’s much more I could say about just this one function. If you’re interested in the specifics of the implementation, leave a comment below! I may circle back to write a technical post covering this distance map function in more detail, especially if there’s interest.

Crunching the Numbers

Utility Scores

Now that we can efficiently determine the distance from any hex to the nearest hex with a defense bonus, we can produce a score representing this aspect of its strategic value. The shorter the distance to a defensive hex, the more valuable the position should be. With that in mind, we can calculate the score as 1 - (distanceToNearestDefenseHex / (maxMovement * 2)), where values range from 0.0 to 1.0. A hex that itself offers a defense bonus has a distance of 0, so it gets a full score of 1.0. Meanwhile, we want to capture the idea that a defensive tile can still be valuable if it can be reached on the next turn, so we divide by the maximum distance that can be traversed over two turns.
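As a quick sketch of the formula in Python, here is a hypothetical `defense_proximity_score` function. The clamp to the 0.0 to 1.0 range is my assumption about how hexes more than two turns away are handled. Without it, the raw formula would go negative for them.

```python
def defense_proximity_score(distance_to_nearest_defense: float, max_movement: int) -> float:
    """1.0 on a defensive hex, falling to 0.0 at two turns of movement away."""
    two_turn_range = max_movement * 2
    score = 1.0 - distance_to_nearest_defense / two_turn_range
    # Assumption: clamp so hexes farther than two turns away bottom out at 0.0
    # instead of going negative.
    return max(0.0, min(1.0, score))
```

Take a mech with a `max_movement` of 4. A hex 4 movement points from cover scores 0.5, and any hex 8 or more points away scores 0.0. Unreachable hexes (distance `math.inf`) also clamp to 0.0.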

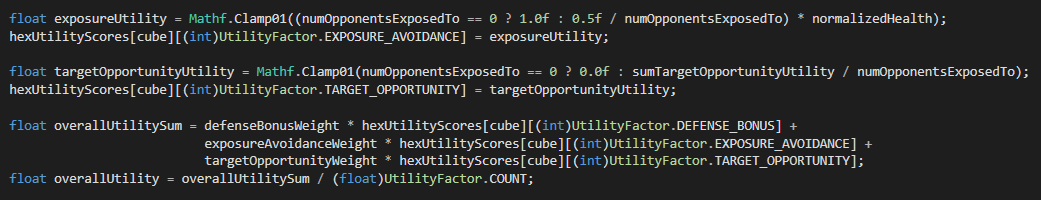

There are, of course, considerations other than the availability of defense bonuses that our AI can take into account, and we can produce scores for them in a similar fashion. For instance, a unit should probably try its best to avoid getting surrounded and to minimize threats. We could calculate an exposureAvoidance score that improves as fewer opponents have line of sight on the unit, with the unit growing more concerned about exposure as its health drops. A unit should also seek out enemies, attempting to maximize the damage it deals to them. In this case, a targetOpportunity score could be calculated, favoring positions that offer line of sight on the most vulnerable targets.
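The post doesn’t pin down exact formulas for these two factors, so the following Python sketch uses hypothetical ones that only follow the stated intent. `exposure_avoidance` drops as more enemies gain line of sight, and it drops faster at low health. `target_opportunity` rewards seeing the most damaged enemy and also returns that enemy. The `Enemy` type and the 0.5 baselines are arbitrary stand-ins, not values from the game.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Enemy:
    name: str
    health: int
    max_health: int


def exposure_avoidance(enemies_with_line_of_sight: int, total_enemies: int,
                       health: int, max_health: int) -> float:
    """1.0 when no enemy can see the hex, lower as exposure rises."""
    if total_enemies == 0:
        return 1.0
    exposure = enemies_with_line_of_sight / total_enemies
    # Assumed curve: concern grows from 0.5 at full health to 1.0 at zero health.
    concern = 0.5 + 0.5 * (1 - health / max_health)
    return 1.0 - exposure * concern


def target_opportunity(visible_enemies: List[Enemy]) -> Tuple[float, Optional[Enemy]]:
    """Score a hex by its most vulnerable visible enemy; also return that enemy."""
    best_score, best_target = 0.0, None
    for enemy in visible_enemies:
        # Any visible target is worth at least 0.5; missing health adds up to 0.5 more.
        vulnerability = 0.5 + 0.5 * (1 - enemy.health / enemy.max_health)
        if vulnerability > best_score:
            best_score, best_target = vulnerability, enemy
    return best_score, best_target
```

Returning the chosen enemy alongside the score lets the attack step reuse it, rather than re-evaluating targets.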

In this code snippet, cube refers to a cube coordinate value

The beauty of utility-based systems is that we don’t have to pick just one score to inform the AI’s decision-making: we can combine all of these considerations! Having calculated each factor, we can unify them in a single weighted average that becomes the overall utility score for a given hex. The AI Manager then compares these scores across all reachable hexes, and the unit moves to the highest-scoring one. That hex could even be the one the unit is already standing on, in which case the unit simply stays put. Finally, for its attack action, the AI can reuse the data it evaluated for the targetOpportunity score to choose a target when one is available.
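Here is one way the weighted average and the hex selection could look, as a Python sketch. The names (`utility`, `choose_destination`, the `defenseProximity` factor key, and the hex ids "A" and "B") are hypothetical. The search starts from the unit’s current hex, so a tie resolves in favor of staying put. That tie-breaking rule is an assumption rather than something the post specifies.

```python
from typing import Dict, Hashable, Mapping

FactorScores = Mapping[str, float]


def utility(factor_scores: FactorScores, weights: Mapping[str, float]) -> float:
    """Weighted average of factor scores, each assumed to lie in [0.0, 1.0]."""
    total_weight = sum(weights.values())
    return sum(w * factor_scores[name] for name, w in weights.items()) / total_weight


def choose_destination(current_hex: Hashable,
                       scores_by_hex: Dict[Hashable, FactorScores],
                       weights: Mapping[str, float]) -> Hashable:
    """Return the reachable hex with the highest utility score."""
    # Seed with the current hex so a tie keeps the unit where it is.
    best_hex = current_hex
    best_score = utility(scores_by_hex[current_hex], weights)
    for hex_id, factor_scores in scores_by_hex.items():
        score = utility(factor_scores, weights)
        if score > best_score:
            best_hex, best_score = hex_id, score
    return best_hex


# Hypothetical example: hex "A" offers cover and a shot; hex "B" is hidden.
scores_by_hex = {
    "A": {"defenseProximity": 1.0, "exposureAvoidance": 0.4, "targetOpportunity": 0.9},
    "B": {"defenseProximity": 0.5, "exposureAvoidance": 1.0, "targetOpportunity": 0.0},
}
balanced = {"defenseProximity": 1, "exposureAvoidance": 1, "targetOpportunity": 1}
timid = {"defenseProximity": 1, "exposureAvoidance": 4, "targetOpportunity": 1}

print(choose_destination("B", scores_by_hex, balanced))  # A
print(choose_destination("B", scores_by_hex, timid))     # B
```

Only the weights differ between the two calls. With equal weights, the unit leaves "B" for the cover and firing line at "A". A profile that quadruples the weight of exposureAvoidance keeps it hidden at "B".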

This approach with utility scores produces a highly flexible system that can be tweaked, extended, and repurposed in a variety of ways. For instance, the weight of each utility factor could vary from unit to unit, so that one unit might have a greater bias toward exposureAvoidance to express a more timid personality. Other factors could be calculated and added to the weighted average later, especially as new mechanics are implemented that must then be considered. Utility systems are an effective tool in other contexts too, such as dialogue branching, dynamic difficulty balancing, and multiplayer matchmaking.

For an excellent primer on utility systems with tips and best practices, I recommend checking out An Introduction To Utility Theory by David "Rez" Graham in Game AI Pro

A New Foe Has Appeared...

AI Extended

With the utility system implemented, I now had a new and improved version of the AI for conducting the enemy units’ turns. I had made a point of developing it so that the interface called by the GameManager could remain the same, while internally each of the core MechAIManager functions was reimplemented for the utility-based approach. Initially, this helped during development, since I could incrementally replace function calls with the new versions while still using the old versions for the remaining subsystems. By the end, it presented an opportunity: with a toggleable variable, the MechAIManager could execute either the old version, for what would become the “Easy” difficulty mode, or the new version, for the “Moderate” difficulty mode.
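The idea of keeping one interface for the GameManager while swapping the internals can be sketched in Python as follows. This `MechAIManager` is only a stand-in named after the post’s class. The `Difficulty` enum and the `take_turn`, `_decision_tree_turn`, and `_utility_turn` methods are hypothetical. The two private methods return labels in place of real turn logic.

```python
from enum import Enum


class Difficulty(Enum):
    EASY = "Easy"          # original decision-tree AI
    MODERATE = "Moderate"  # utility-based AI


class MechAIManager:
    """Sketch: one public entry point, two interchangeable implementations."""

    def __init__(self, difficulty: Difficulty):
        self.difficulty = difficulty  # the toggle, set from the mission's difficulty pick

    def take_turn(self, unit: str) -> str:
        # Callers only ever use take_turn, so the difficulty switch is
        # invisible to them.
        if self.difficulty is Difficulty.EASY:
            return self._decision_tree_turn(unit)
        return self._utility_turn(unit)

    def _decision_tree_turn(self, unit: str) -> str:
        return f"{unit}: decision tree"

    def _utility_turn(self, unit: str) -> str:
        return f"{unit}: utility scores"
```

Keeping the branch inside the manager also matches the incremental migration described above. Each internal function can be swapped to its new version one at a time without touching the callers.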

All that was needed to make both modes meaningfully available in-game was a user interface. I decided to let the player pick a difficulty mode at the start of each mission. The player’s selection determines which mode the AI executes, and sure enough, the “Moderate” mode is definitely more challenging.

I've left the "Hard" mode as an opportunity for my future self to explore, perhaps as a Monte Carlo implementation

With two days remaining in this phase of development, I wanted to dedicate some effort to a key principle of designing AI for games: an AI opponent should not only be challenging but also fun to play against. This may sound obvious, but the key distinction is that challenging does not always equal fun. Just as a puzzle game is much less fun without clues (devolving into sheer trial and error), an AI opponent is often much more interesting to play against when it gives some indication of how it operates. This gives the player the opportunity to intuit how their opponent behaves and then exploit that knowledge. When done right, it leaves the player feeling especially clever for having anticipated their enemy’s actions and outwitted them.

To that end, the last feature I implemented for this phase was a dialogue system. At the start of the AI opponent’s turn, a short line of speech displays on screen, telegraphing what the AI is doing. In particular, the phrase reflects both the action and the relevant decision-making factor(s), giving it context. For example, before an action that reduces exposure, the AI might utter “Minimizing liabilities…”, and when the AI has chosen to fire on the closer of two targets, the player may see “Targeting proximal threat…”. Each distinct context has a few phrases to choose from for variety. If the player pays attention, they can begin to form a sense of how they might exploit the AI’s behavior patterns.
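A context-keyed phrase table is one simple way to implement this. In the Python sketch below, the context keys are hypothetical. The first phrase in each list is quoted from the post, and the second is an invented placeholder. Skipping the previously shown line is an extra assumption for variety, not a described feature.

```python
import random
from typing import Dict, List, Optional

# Hypothetical context keys; second entries are placeholder phrases.
PHRASES: Dict[str, List[str]] = {
    "reduce_exposure": ["Minimizing liabilities…", "Seeking cover…"],
    "target_proximal": ["Targeting proximal threat…", "Engaging nearest hostile…"],
}


def pick_phrase(context: str, rng: random.Random, last: Optional[str] = None) -> str:
    """Pick a phrase for the given decision context."""
    options = PHRASES[context]
    # Avoid repeating the previous line when an alternative exists.
    candidates = [p for p in options if p != last] or options
    return rng.choice(candidates)


rng = random.Random()
print(pick_phrase("reduce_exposure", rng))
```

Passing in a `random.Random` instance keeps the choice reproducible when seeded, which can help when testing.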

This unit is repositioning in order to gain line of sight on its next target.

When All Is Said and Done

Conclusion

These features concluded the development phase dedicated to AI Extension. The game now offered greater depth both in the gameplay for the player and in the strategy of the AI opponent. The player could now choose between two difficulty levels for varying degrees of challenge, and the new dialogue system conveyed the AI’s decision-making, making for a more engaging opponent.

If you’d like to play this version of the project, check out the download labelled Hex Tactics [Extended AI] 2020-11-28.

Keep an eye out for the next post, in which I’ll cover the following phase dedicated to adding audio and enhancing the user experience!


Hex Tactics

AI & Procgen rapid prototyping demo

| Status | Released |
| Author | Robert Ackley |
| Genre | Strategy |
| Tags | Hex Based, Isometric, Mechs, Sci-fi, Singleplayer, Tactical, Top-Down, Turn-based, Turn-Based Combat, Unity |
| Languages | English |

More posts

- Introducing: Hex Tactics (Dec 28, 2020)
